Generation to Communication

Now that we have thoroughly discussed the nature of the symbolic packages that are exchanged during communication, we need to talk more about the actual process of that transfer. It seems best to work our way forward from an initial set of primitives (both synchronic and diachronic).

Generator

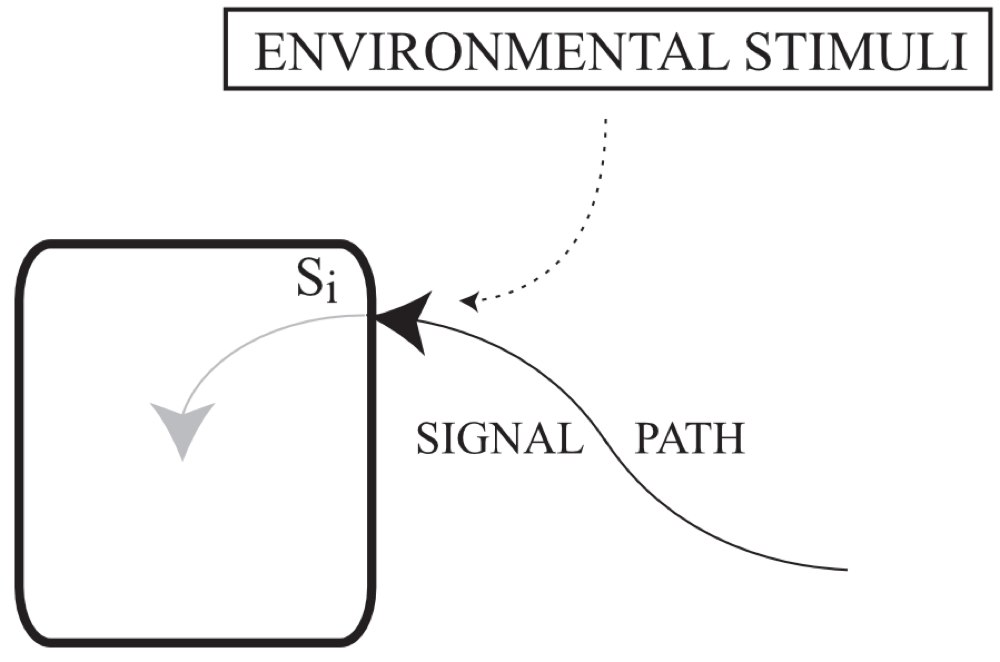

We are going to start with one potential participant that we will identify as a generator:

Generator

Imagine an entity (the rounded rectangle profiled with a bold border) that is emanating some sort of information (So) across a range of directions into its environment. Broadly stated, that emanation could be light, sound, heat, touch pressure, and so on, or their combinations.

A signal is any part of an emanation that:

a. incorporates some type of orderly distinction in its form (such as a pattern of intensity, duration, and so on)

b. where that distinction represents further information about some entity or other (i.e., a meaning).

In contrast, noise (the dotted arrow) is anything that obscures that signal’s distinction between form and meaning. The balance between a signal and its noise is the signal-to-noise ratio, and any part of that noise that conveys its own meaning is known as crosstalk.
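This tutorial uses "signal-to-noise ratio" qualitatively, but a rough numerical sketch might help to fix the idea. The version below borrows the conventional engineering definition (the ratio of mean signal power to mean noise power, expressed in decibels). The function names and the sample values are hypothetical and chosen only for illustration.

```python
import math


def mean_power(samples):
    """Mean squared amplitude of a sampled emanation."""
    return sum(x * x for x in samples) / len(samples)


def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels: 10 * log10(P_signal / P_noise)."""
    return 10 * math.log10(mean_power(signal) / mean_power(noise))


# Hypothetical values: an orderly alternating pattern (the signal)
# and a weaker, disorderly disturbance (the noise).
signal = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0, 1.0, -1.0]
noise = [0.1, -0.1, 0.1, 0.1, -0.1, 0.1, -0.1, -0.1]

print(round(snr_db(signal, noise), 1))
```

With these made-up values the signal carries about a hundred times the power of the noise, which works out to roughly 20 dB. Louder noise (or a fainter signal) lowers the ratio.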

As discussed above in the section on iconicity, a signal is iconic when the distinction in its form is concretely associated with a meaning; that is to say, a signal would be more iconic if its sheer amplitude represented increased size, or if faintness of the signal represented a similar weakness. Towards the other end of that spectrum, a signal is more symbolic when that relationship between form and meaning is abstract; for example, let’s consider words that mean [LOUD EXPLOSIVE NOISE]. The spoken word “discharge” is not as iconic as the word “pop,” where the latter has an explosive release of /p/ into the open vowel /a/. And the word “POP” is even more iconic (written or spoken, with signed equivalence) than “pop” is when it represents a particularly loud explosive noise.

At this point, we are already letting examples seep in that are specifically linguistic in nature (rather than just communicative), because their clear illustrative value outweighs the need to keep this discussion on a more general footing about broadly environmental events. As we progress, we will talk more about the specific involvement of brains in this process of form-meaning association; that is to say, while some types of signals are identified with intentional communication (as in sign or speech), others are not (as in cell signaling, or plants communicating through volatile organic compounds… probably). Yet others occupy some sort of middle ground (as in ant versus human pheromones). This aspect of the discussion, though, can safely be deferred until later in the tutorial.

For our purposes, forms (including those of emanations) have power and precision. Power is the absolute intensity of an individual form at a given point in time, and precision is the relative intensity of one form compared to another from which it is distinct (in space, time, or both). These functions are both types of prominence.
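As a minimal sketch of those two definitions, assume that a form is a list of intensity samples over time. Power then reads off one absolute intensity, and precision compares two of them. Treating precision as a simple ratio is our assumption for this illustration (the text only requires that it be relative), and the names and numbers are hypothetical.

```python
def power(form, t):
    """Absolute intensity of one form at time index t."""
    return abs(form[t])


def precision(form_a, t_a, form_b, t_b):
    """Relative intensity of one form compared with another.

    Modeled here as a ratio, which is an assumption made for illustration.
    """
    return power(form_a, t_a) / power(form_b, t_b)


# Hypothetical intensity samples for two distinct forms.
whisper = [0.25, 0.5, 0.25]
shout = [2.0, 4.0, 2.0]

print(power(shout, 1))                  # absolute intensity of the shout at its peak
print(precision(shout, 1, whisper, 1))  # shout relative to whisper (distinct in space)
print(precision(shout, 1, shout, 0))    # one form at two points in time
```

Note that the last call compares a single form with itself at different moments, which matches the point that two forms can be distinct in space, in time, or in both.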

Receiver

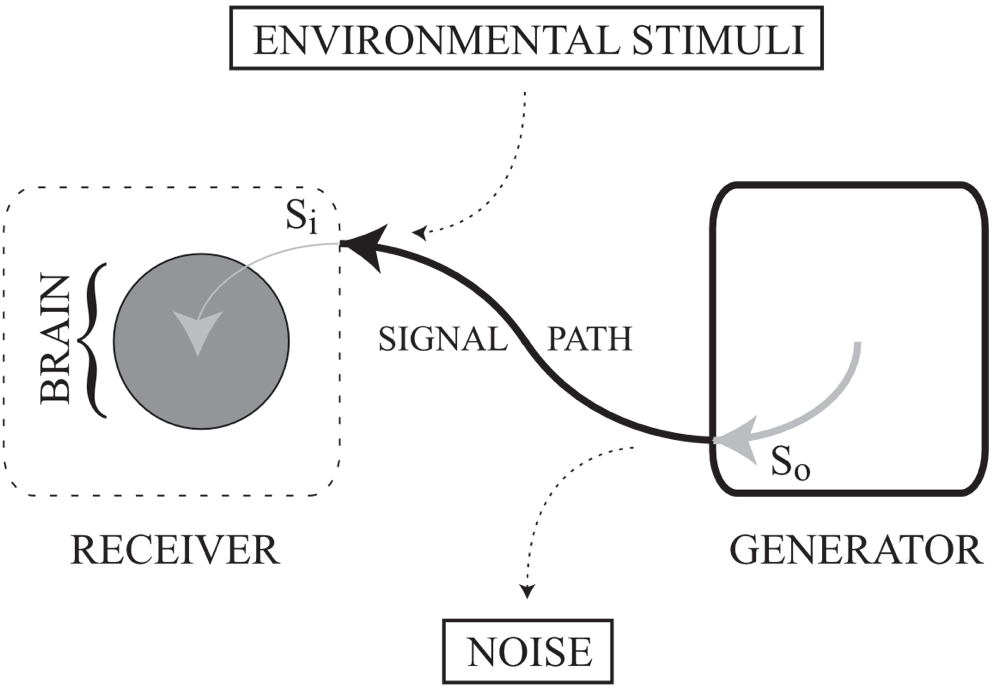

Now we add a second participant, namely a receiver:

Receiver

The profiled entity is absorbing some sort of information (Si) from various directions in its environment. Sometimes that acquisition is just some amount of environmental noise (the dotted arrow), but it can also be a signal that has described a path from a generator (the solid black arrow).

While we are primarily concerned with acquisitions that the receiver is actually processing (the solid grey arrow), the emanation might just be absorbed or reflected without significant consequence (i.e., without any meaningful change or effect). Some physical experiences are not detected by the receiver’s sensory array (whether those signals originate externally or internally).

Receiver

Generation and Reception

This is what the model looks like when these two components are combined, and the receiver is cast as an entity with a brain:

Generation

Reception

In the first case, generation is profiled, and in the second, we profile reception.

We added the brain because we are working towards a specific model of people communicating with one another, despite the fact that there are less intentional versions of the process that are also called communication (such as a door that communicates into an adjacent room, a metropolis with efficient rail communication, or even the earlier plant example… although we are currently noncommittal about ants). Similarly, the diagram locates input higher than output only due to the speciesist conceit that human receptors tend to be gathered upward in the head, whereas their articulators tend to be arranged lower than that.

When a signal encounters an intermediate entity on the way to a receiver (e.g., light passing through glass on the way to an eye, sound reflecting off of a hard surface on the way to an ear, pressure being felt through clothes, and so on), and there is no change in the distinct part of its form that represents a meaning (e.g., when a reflection remains unadulterated, or a medium is transparent to a signal, and so on), then there is no change in meaning, so we are not going to unnecessarily complicate the diagrams to include such inconsequential events along the signal path. (This tutorial takes no substantive stance on the status of relayed telepathy.)

Sensation

Imagine, then, an emanator that is banging air molecules together, radiating light wavicles, or otherwise generating output signals (So). Some of that energy traces a signal path intersecting at least one sensor in a receiver’s array:

Sensation

Humans are typically able to absorb many kinds of input, whether conveyed by electromagnetic radiation, volatilized chemical compounds, or mechanical energy traveling through such media as air, water, or the ground. While some of those emanations originate from outside of the receiver’s body, others are internal, and a clear description of communication requires us to sort some of that out. There is no need to memorize the following terms, but the knowledge should (eventually) contribute to the cognitive model that you are constructing.

The special sensory receptors are the organs in the head that detect light (photoreceptors in the eyes), sounds (mechanoreceptors in the ears), and smells and tastes (chemoreceptors in the nose and mouth). These special sensors are exteroceptive, in that they act as environmental interfaces. This cephalization of the special sensors keeps them near the brain, which cuts down on processing time and improves sensory integration.

There are also special sensors whose environmental interface is not as discrete or “surfacey” as that of the others. Mechanoreceptors in the vestibular system detect changes in the head as it is influenced by gravity, namely in terms of angular and linear acceleration in three spatial dimensions (i.e., rotation and translation in space).

Moving the effective location of your special sensors can equally dislocate a primary chunk of your sense of self, as is leveraged in virtual reality (VR) systems; for example, if you (are a sighted person and you) wear a VR helmet rigged to a remote camera that is viewing your body from the side, then your sense of self relocates with the camera and you will experience a (sometimes quite significant) delay in your identification of yourself as the VR-gear-wearing person whom you are viewing. This can vary by person, depending on their investment in their visual experience of their environment; in other words, some people are more like sighthounds, some are more like scenthounds, and so on.

A distinction tends to be made between these special cephalic organs and the general or somatic sensors whose receptors are distributed elsewhere than your κεφάλι, where that more general set is subdivided as follows:

• exteroceptive skin sensors to detect pain, pressure, temperature, touch, and tension; and

• internal organ sensors that are either:

◦ proprioceptive receptors in the joints, ligaments, muscles, and tendons that detect muscle tension (proprioceptors), so as to sense movement strength and body part position (and balance, when integrated with information from the vestibular system), or

◦ visceroreceptive internal organ receptors that detect blood pressure (pressoreceptors), stretching (i.e., stretch receptors in the bladder, lungs, and stomach), and chemical concentrations in the blood and tissues (e.g., chemoreceptors for carbon dioxide, glucose, oxygen, and so on).

Nociceptors (for pain) and thermoreceptors are found both in the skin and in some internal organs, and are classified accordingly (as whichever-ceptive of the two choices).
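If it helps to see that subdivision laid out as data, here is one possible sketch. The record layout, the field names, and the `select` helper are all hypothetical. The vestibular mechanoreceptors are left out because their interface was described above as less discrete, and nociceptors and thermoreceptors are left out because their classification depends on where they are found.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sensor:
    name: str
    group: str        # "special" (cephalic) or "general" (somatic)
    orientation: str  # "exteroceptive", "proprioceptive", or "visceroreceptive"
    location: str
    detects: tuple


SENSORS = [
    Sensor("photoreceptors", "special", "exteroceptive", "eyes", ("light",)),
    Sensor("mechanoreceptors", "special", "exteroceptive", "ears", ("sound",)),
    Sensor("chemoreceptors", "special", "exteroceptive", "nose and mouth",
           ("smell", "taste")),
    Sensor("skin sensors", "general", "exteroceptive", "skin",
           ("pain", "pressure", "temperature", "touch", "tension")),
    Sensor("proprioceptors", "general", "proprioceptive",
           "joints, ligaments, muscles, tendons", ("muscle tension",)),
    Sensor("pressoreceptors", "general", "visceroreceptive", "internal organs",
           ("blood pressure",)),
    Sensor("stretch receptors", "general", "visceroreceptive",
           "bladder, lungs, stomach", ("stretching",)),
    Sensor("chemoreceptors", "general", "visceroreceptive", "blood and tissues",
           ("carbon dioxide", "glucose", "oxygen")),
]


def select(group=None, orientation=None):
    """Return the sensors matching the given group and/or orientation."""
    return [
        s for s in SENSORS
        if (group is None or s.group == group)
        and (orientation is None or s.orientation == orientation)
    ]


for sensor in select(group="general", orientation="visceroreceptive"):
    print(sensor.name, "->", ", ".join(sensor.detects))
```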

The independent relocation of the somatic sensors (i.e., not dependent on relocating the special sensors) is a contemporary technological grail. If you can figure out how to make it seem like a person’s sense of self is relocating to a remote touch site, the world will (virtually) beat a path to your door.

Interoception is an emergent property that represents a sense of the body’s internal state, accepting input from all of the following systems: cardiovascular, endocrine, immune, gastrointestinal, genitourinary, nociceptive, respiratory, thermoregulatory, and affective touch. These all contribute to the maintenance of homeostasis and a sense of self. It is not surprising, then, that diversity in such a broad system has been proposed to influence a host of disorders, including PTSD, OCD, ASD, anxiety, panic, eating, and more.

The sensation of an environmental signal is always a local consequence of a remote event, relative to your actual body. You don’t really see lightning or hear thunder “way over there,” but rather directly on your retina and cochlea, and then in your brain. (This is one reason that VR works so well, namely that you are already geared to interpret local events as if they were remote.) Even with touch, the pressure itself, though immediate to the surface layer of the skin, is minutely remote from the sensors below that surface. So remember this next bit well:

Your brain’s portrayal of your environment is no farther away than the boundaries established by your sensor array.

Period.

To sum things up: 1) light and sound are the most common forms for communication signals (with the rest of the forms, such as volatile chemicals, tending to be treated as alternatives or ancillaries), and 2) interoception will be discussed later as an important aspect of meaning.

Transduction

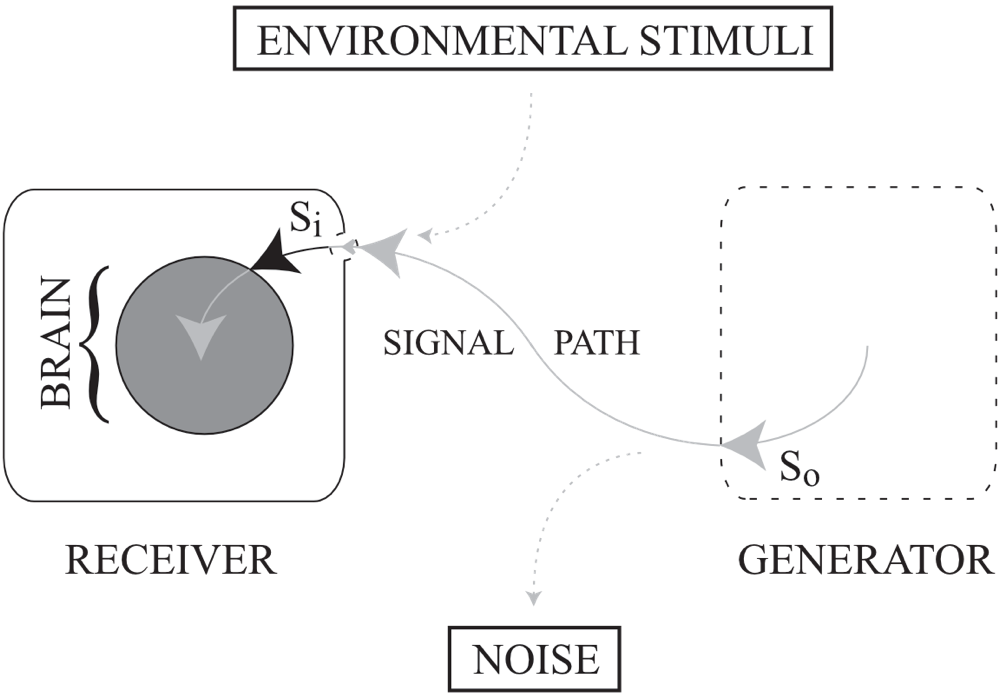

As sensors acquire a (noisy) signal, they transduce it (electro)mechanically from the energy of origin (i.e., electromagnetic, chemical, and so on) into electrochemical energy that the body can process (So → Si):

Transduction

Roughly, then, sensation will be a signal’s detection, including the immediate transduction which aids that reception (i.e., the reaction of rods, cones, cochlear nerves, and so on). While a functional sensory system can filter out some noise, a dysfunctional one can introduce more.

That’s another important bit to remember for our intensely special students.

Perception

Perception, it follows, will be any transduction and transmission of the new, local signal beyond the sensory organ; in other words, this process gets the electrochemical signal from a sensory organ to the brain, including its reception there as a percept (i.e., the solid grey arrow in the brain):

Perception

As with sensation, perception can affect the signal-to-noise ratio.

There is a great deal of controversy surrounding the brain’s boundaries (so there is no symbol on the diagram to represent the creation of the percept), but that is beyond the scope of this work, and definitions that precise have no critical bearing here. In short, there is now a brain receiving a palatable form of a (preferably less noisy) signal that was originally generated in the receiver’s environment (So → Si → brain).
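The chain So → Si → brain can be sketched as two stages, each of which can change how noisy the signal is. This is only a toy model under stated assumptions: the `Signal` record, the stage labels, and the multiplicative `noise_gain` parameter are hypothetical, with a gain below 1 standing in for a functional stage that filters noise and a gain above 1 for a dysfunctional stage that introduces more.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    stage: str       # "So", "Si", or "percept"
    strength: float  # strength of the meaningful distinction (arbitrary units)
    noise: float     # amount of noise riding along with it (arbitrary units)


def sensation(emanation, noise_gain=0.5):
    """Detect an external signal (So) and transduce it into a local one (Si)."""
    return Signal("Si", emanation.strength, emanation.noise * noise_gain)


def perception(local_signal, noise_gain=0.5):
    """Carry the local signal (Si) from the sensory organ to the brain."""
    return Signal("percept", local_signal.strength, local_signal.noise * noise_gain)


so = Signal("So", strength=1.0, noise=0.4)

# Functional sensation and perception: the percept is less noisy than So.
percept = perception(sensation(so))
print(percept)

# Dysfunctional sensation (hypothetical gain of 2.0): more noise than at the start.
print(sensation(so, noise_gain=2.0))
```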

Cognition

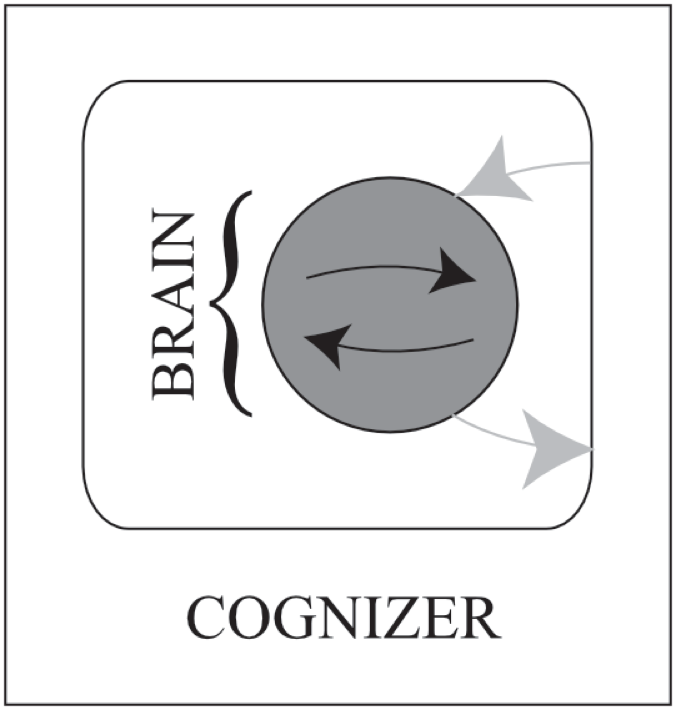

What the brain does with these percepts will be a part of cognition:

Cognition

To clarify:

• the actual signal to the brain’s (fuzzy) boundary is assigned to perception (i.e., upper grey arrow),

• the output beyond the brain is a type of (electrochemical) signal generation (i.e., lower grey arrow), and

• what happens to percepts within the brain is circumscribed here as cognition (i.e., the dark arrows), some parts of which are more automatized than others.

Cognition is the place where plans are made to associate forms with meanings.

Cognition is such a complex topic that its discussion is distributed through the rest of this material, including a part of cognition called conceptualization, which is a sort of cognitive modeling or volitional imagery (as introduced earlier in this tutorial); for example, cognition routinely supports distortions (such as illusions) that only seem as though they were accurate models of external, objective reality (e.g., blindspot interpolation); in addition, cognition processes a lot more than just percepts that arrive “from the outside world,” where the sensory experience is not directly associated with immediate environmental events (e.g., hallucinations, dreaming, and so on, where hypnagogic and hypnopompic states are normal).

Power, Precision, and Prominence

While that is happening, this is what we want you to know about these specific cognitive functions:

• The complex set of functions that evaluate a signal’s power (i.e., its absolute intensity) will be treated here as if they were a single entity, which will also be called power; likewise,

• the set of functions which evaluates a signal’s precision (i.e., relative power) will be treated as a unitary entity also called precision (cf. “comparison”; Langacker, 1987: 100), and

• at this point, prominence will be the evaluation of a signal’s intensity as high (so we will use “salience” with a more general meaning).

Power transitively evaluates the absolute intensity of that signal’s generator (i.e., if there is power in the signal, and the signal came from the generator, then the generator had power in it); likewise, those cognitive processes which evaluate the precision between two or more such signals are evaluating the relative power of those signals’ generators (which might be just one generator at different points in time). In short, a signal’s form reveals information about its generator. Any receiver that is doing a poor job of evaluating a signal’s intensity is likewise poorly evaluating the generator’s properties.

Later on, we will show that this equation holds whether these functions are evaluating:

• the direct (i.e., proximal) experience of a signal, such as the sensation of the impact when catching a ball; or

• the indirect (i.e., distal) experience of a signal, such as the sensation associated with only remembering such an impact.

Either way, this amounts to the perception of:

• the absolute size or strength of an entity (its power), such as a perceived emotion, color, or threat, and

• the relative proportions of multiple entities (their precision), such as perceptual changes in general, or the relative hugeness or imminence of a threat in specific.

In their most primitive aspects, power and precision are essential functions across chordate behavior, whether or not vermiforms (for example) are actually suggested as providing the evolutionary source for these functions in human cognition.

Research suggests that these functions are necessary for semantic processing, which we describe in the section on semeiognomy; in fact, these functions are portrayed as fundamental cognitive abilities evaluating perceptual events in general (Langacker, 1987: 100ff; Bolinger, 1986: ch. 6f; Mansfield, 1997; just to begin with).

Mirror Neurons

Mirror neurons are not different in structure from other neurons, but they act together to provide a special function. These neurons learn to fire when we experience someone or something else doing something. They are found in such brain areas (monkey or human, depending) as those associated with: planning spatial and sensory guidance of movement; control of movement; the main sensory receptive area for touch; sensory integration for spatial sense and navigation; and in humans also the area for triggering “go/no go” tasks, risk assessment, and aversion.

Information from mirror neuron processing contributes to:

• a person’s body schema, which is their integrated neural representation of their body;

• their peripersonal space map, which is their model of the space around their body that is essentially within their physical grasp; and

• their extrapersonal space map, which is their model of reality beyond their physical reach.

Tools can come to be incorporated into body schema and peripersonal space, at which point they are treated as part of one’s body. A typically developing person’s sense of self is strongly associated with their vision, and there are experiments that muck about with vision to disassociate a person’s sense of self from their actual physical location (as described above). There is too much to talk about here, but you can imagine that there are all sorts of sense-of-self variations based on the likes of neglect disorders, amputation, attention disorders, and so on. These representations are normally sensorily integrated, with heavy emphasis on vision and touch, and in cases of impairments, presentation of stimuli in one mode can cause extinction in another (e.g., a strongly unimodal reliance on vision over audition).

Myth: Swiss Cheese Metaphor

Before proceeding, we should touch on a myth related to sensation and perception. When it comes to diversity in cortical sensory processing, there is a common-but-mistaken impression that goes by the name of “the Swiss cheese metaphor.” This description is often used to illustrate such impairments specifically in visual processing, but vision is only one aspect of image formation. The expression is supposed to convey the notion that a person can see and understand some parts of a scene but not others, where those ‘holes’ might move around. It makes it seem like some parts of a person’s eyes work better than others, as if the person might be expected to reliably see and understand things that are always presented in a specific, functional area of their field of vision (which might not exist). Simply put: that’s wrong.

Here’s what you should learn instead:

• Information comes at us from the environment (including our own bodies) in different ways (i.e., various types of vision, hearing, touch, olfaction, gustation, proprioception, and so on).

• Typically, we filter noise out of those signals.

• We pull a signal together to make a usefully accurate model of the environment.

• This system is enormously complex, and a person’s compromised system might fail or succeed in any number of different ways, and it might do so inconsistently.

• So, unlike Swiss cheese, a person’s challenged cortical subsystems are not going to be composed of discrete, reliably bad/good areas of failure/success.

• Therefore, when you are trying to get a particular meaning across to someone whose sensory subsystems are not typical, try more than one modality, try multiple times, and don’t expect the same thing to work every time; in other words, you’re not just trying to toss a marble through a stable hole in the Swiss cheese.

Which is our segue to…

Articulation

Now take a look at all of this from the perspective of a cognizer taking on the role of a generator, where that generator will soon come to be discussed as it represents a speaker or signer (S):

Articulation

Having processed the percept into palatable input for the brain, the cognizer then formulates a meaning into motor plans (and other associated signals), and some of those are sent to the body’s articulators. Just planning to move is associated with bodily sensations, and this formulation involves narrowing down a wide range of merely potential plans. (Jerking in association with simply thinking about moving is a thing. Being a jerk in association with thinking about moving is a different thing.)

The action of articulation in the diagram above begins when the internal electrochemical signal leaves the brain, and continues as it becomes bodily motion, which essentially releases another signal into the outside world (So), ready to be picked up by something else as an input signal (Si).

Properties of intensity, as conceived by the generator, can be encoded iconically into their signals (e.g., a powerful signal might represent the strength of an emotion associated with a particular meaning), which will then affect the intensity of the articulation when rendering that signal. One of the broad differences between general communication and language in specific is that the latter tends to be less concrete/iconic, and more symbolic/arbitrary.

So the articulating individual can vary the amount of power that they put into the generation of their signal, which can then be received as proximal or distal. To quote Grover:

“First, this is near. Right here, near. Mhm! [Grover runs from his proximal location to a distal one.] This is FAAAAAR.” (First witnessed by the quoting author as: “Near and far.” Sesame Street, created by Jim Henson, performance by Frank Oz, season 1, episode 57, PBS, as aired in January, 1970. Sponsored by the letters ‘i’, ‘p’, and ‘u’, and the numbers ‘4’ and ‘5’.)

Note the iconicity in the spoken version of “FAAAAAR,” as well as in the written one, namely the combined capitals, italics, and repeated letters to represent a significantly increased intensity of signal distance.

In the case of proximal reception of a signal, the evaluation is direct; that is to say, if you throw a rock to/at me (where that throw is an example of articulation), then I can directly evaluate its impact as a proximal event simply by actually feeling it. This measure is precise even if I have never had any experience with catching rocks (or being hit by them), because my evaluation is made after the signal’s direct reception, and is based solely upon the energy of the impact (i.e., the articulatory energy put into the signal’s energy) rather than any previous experience of my own. The same goes for the proximal reception of any sensory signal.

In comparison, I can indirectly evaluate the strength of that same signal as a distal event (i.e., without actually feeling it) by observing its generator (pitcher, speaker, signer, or whatever). Did the gesture appear efficient or wasteful? Did the articulation seem to be energetic? Was the motion terse and concentrated, or sustained and broadcast? How has it felt to me when I have made the same sort of gesture? The accuracy of this indirect measure must rely upon my previous experience (learned or innate) with similar events, where this experience can be as specific as my having thrown a rock to/at another person, or as general as my often having tossed aside inconsequential objects (or even remarks). The mirror neuron system is integrated with this process.

Digging into more deeply buried experience (for use in a distal evaluation) requires greater effort, and there are ways in which language can be spoken or signed with a greater infusion of energy on S’s part that will help the looker or listener (L) know to dredge it up. We are working towards demonstrating how that works (among many other things), and this is just a brief mention to note where some of this is heading.

We stated earlier that power and precision evaluate the intensity of a signal’s generator. Whether that evaluation is proximal or distal, a signal’s form still reveals information about its generator.

And even when the signal is evaluated as distal (i.e., “FAAAAAR”), it is still a local perception of a remote event (i.e., we stated earlier that your personal portrayal of the world is no farther away than the boundaries of your sensory array).

Proximal (direct) attributes should be processed more easily than distal ones, because they are not “interpreted,” and require no access to experience for evaluation; however, they should still contribute to experience afterward to facilitate the processing of future instances of the event, which might be received in their turn as distal signals. In that sense, proximal processing is a primitive precursor of distal processing.
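The contrast between the two kinds of evaluation can be sketched as follows. Everything here is a hypothetical simplification: experience is modeled as a dictionary from a gesture label to remembered intensities, and a distal evaluation is modeled as the average of those memories. The only claims taken from the text are that proximal evaluation needs no experience, that distal evaluation depends on it, and that proximal events feed experience afterward.

```python
def evaluate_proximal(impact_energy):
    """Direct evaluation: the felt energy is the measure, no experience needed."""
    return impact_energy


def evaluate_distal(observed_gesture, experience):
    """Indirect evaluation: estimate intensity from remembered similar events.

    Returns None when the receiver has no relevant experience to draw on.
    """
    remembered = experience.get(observed_gesture, [])
    if not remembered:
        return None
    return sum(remembered) / len(remembered)


experience = {}  # the receiver's memory: gesture label -> felt intensities

# With no experience, watching a throw tells the receiver nothing reliable.
print(evaluate_distal("overhand throw", experience))

# Being hit is evaluated directly, and then contributes to experience.
felt = evaluate_proximal(6.0)
experience.setdefault("overhand throw", []).append(felt)

# The next observed throw can now be evaluated without feeling it.
print(evaluate_distal("overhand throw", experience))
```

In this sketch the distal estimate can only ever be as good as the stored experience, which is one way of picturing proximal processing as a precursor of distal processing.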

The greater the experiential overlap between generator and receiver (i.e., articulator and perceiver), the greater the likelihood that an indirect evaluation will be accurate, and that the intended meaning will be conveyed without the signal having to be directly intense, which is a relatively wasteful encoding; in other words, if I know that you have been struck by a rock before, then I can just make a gesture to elicit the intended feeling in you. All I have to do is articulate a gesture as if I were going to throw a rock (and maybe not all that powerful an actual gesture at that).

And while we have been using concrete examples for their illustrative clarity, you should understand that these sorts of principles apply to processes that are more symbolic as well. While iconic or indexical gestures have been proposed as the earliest communication units forming the foundation of subsequent linguistic evolution (Armstrong, Stokoe, and Wilcox, 1995), a symbolic sentence (such as this one) might have emphasis (like those very italics) on a signed or spoken form, which is intended to represent an intensity of meaning (i.e., power or precision). Note that communication tends to be more proximal, and language more distal…

…which took a dissertation to explain well (Mansfield, 1997), chunks of which are adapted for this tutorial, when not copied wholesale (which, as the copyright holder, the primary author is allowed to do with a light heart).

Communication

Having set all of this up, the following represents communication:

Communication

In short:

• Some participant begins things by taking on the role of generator, drawing in perceptions from the outside and adding them to their current set of conceptions, then evaluating this conceptual set and articulating a gesture that is typically audible and visible (with ancillary components).

• This expression adds to the environmental input for the next participant, who receives it.

• Lather, rinse, repeat.

The farther we get through this tutorial, the closer we get to showing how that schematic description manifests in terms of an actual conversation.

In this model, then, a participant might sense something huge or intense in their environment, perceive it, and then articulate a signal in response which is also large or intense (maybe to pass along a warning). The reception of this subsequent signal (by some other participant) forms the overlap which closes the coil (in the above diagram) where it was originally open at transduction.

Of course, the only reason that the diagram uses a coil instead of a plain circular loop is that it casually associates each generator with the configuration of a primate head (as mentioned earlier), so the articulators are below the receivers, and this diagram portrays only two participants (essentially facing each other).
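The turn-taking cycle summarized in this section (take in, evaluate, articulate, and then swap roles) can be sketched as a short loop. This is a toy model and not a claim about how any real conversation is processed: the `Participant` class, its methods, and the rule that a generator simply articulates its most intense conception are all assumptions made for illustration.

```python
class Participant:
    def __init__(self, name):
        self.name = name
        self.conceptions = []  # intensities of what has been perceived so far

    def receive(self, signal):
        """Add an incoming perception to the current set of conceptions."""
        self.conceptions.append(signal)

    def generate(self):
        """Evaluate the conceptual set and articulate a matching signal.

        Assumption: the articulated signal is as intense as the most
        intense conception (an iconic response, such as a warning).
        """
        return max(self.conceptions, default=0.0)


def converse(first, second, environmental_input, turns):
    """Alternate the generator and receiver roles for a number of turns."""
    generator, receiver = first, second
    generator.receive(environmental_input)
    log = []
    for _ in range(turns):
        signal = generator.generate()
        receiver.receive(signal)
        log.append((generator.name, receiver.name, signal))
        generator, receiver = receiver, generator  # lather, rinse, repeat
    return log


for sender, hearer, signal in converse(Participant("A"), Participant("B"), 5.0, 3):
    print(f"{sender} -> {hearer}: intensity {signal}")
```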

Before proceeding, it is important to compare our domain-specific definition of communication with its broader range of conventional meanings (i.e., those that are familiar in the community). In its most schematic sense, communication is the dispersion of contents from one container into at least one other. Those contents cannot be shared without traversing the intervening spacetime between the containers, where:

• A warm radiator communicates its thermal energy to its environment.

• An infected arthropod communicates its disease to its victim.

• A vibrant rainbow communicates its colors to its observers.

• A laden railway system communicates its load to its next station.

• A connecting doorway communicates its passers-through to the adjacent room.

We can experience those communicative activities by directly examining each of those types of contents. This theme becomes substantially more complex when those contents are intangible:

• A foreboding sign contains a warning that it communicates to its observers.

• A rabid fan contains an enthusiasm that it communicates to its bystanders.

• An expressive signer/speaker contains a conceptualization that it communicates to its listener/looker.

So our particular definition is compatible with the generic one.

Communication Context

When the generic notion of a “participant” is cast specifically in terms of entities that are taking turns acting as generator and receiver in a communication event, then the diagram represents the context for those participants in that event:

Context (Schematization)

When the role of the generator is cast specifically as a signer/speaker (S) in a language event, and the receiver is likewise identified with a looker/listener (L), then that context becomes known as the ground (as in a type of spatiotemporal reality anchor point):

Ground (Schematization)

We talked about G earlier when we were introducing the use of diagrams. This will all be important when it comes to the discussion of G in regards to setting up a model of reality.

The ground is a critical component in the conventional meanings of words such as: “this,” “you,” “does,” “must,” and “went”; in other words, you should spend some time verifying the reliability of a client’s Theory of Mind (which affects their use of G) before you design therapy that digs into the likes of pronouns and tense/aspect.

Communication from Generation

Written very broadly, then, communication does not exist without some form of participant input and output, followed by a participant’s ability to associate those forms with meanings. This tutorial is working you towards an understanding of what “meaning” means in that sense, and where it came from, because points of origin are usually a good place to look when you are trying to understand a disorder.

Cognitive abilities such as power and precision are developed in an environment which presses the specific need to evaluate the intensity of perceptions; in other words, it is helpful to be able to identify entities that are more powerful than you are, because they might pose a threat (or represent a potent survival resource). Similarly, it is helpful to know if they are relatively less powerful, because food (and the like).

To the degree that communication itself becomes vital for a species in that environment, developing the additional ability to communicate or externalize conceptions or perceptions of prominence (as articulation) would present a great advantage over only being able to process incoming perceptions of prominence. A species has a distinct advantage if it is composed of individuals which can not only perceive danger, but can communicate the imminence of that danger to other individuals. Given the iconic nature of these signals, and the universality of the behavior in reaction to these signals (fight/flight), some universality should also be expected across signal systems such as communication and language.

A directly powerful signal should have its origin in an efficient transfer of articulatory energy from the gesture which generated it, because the alternative would be enormously wasteful, namely where the power of the signal only represents a fraction of the articulatory energy (i.e., it would be a lot of energy spent to create a weak articulation). Such a waste is not conducive to the survival of the generator.

Direct power has its advantages in primitive systems of communication, because the message gets across even when the generator and the receiver have little or no experience in common: signals which either overwhelm or saturate a sensory field will be evaluated as iconic and powerful (i.e., prominent). To avoid waste, the transfer of articulatory energy to signal energy has to be nearly lossless; that is, the two energies have to be essentially equal. Evaluated as a proximal event, the strength of that signal’s form has an equally intense meaning (again iconic), namely that the sensory field is being overwhelmed or saturated.

Shared experience with this primitive equation promotes the development of communication systems which can rely upon the additional evaluation of signals as distal events, systems like language. When it comes to these more sophisticated signal systems, actual signal strength is not necessarily proportional to meaning, but its perceived articulatory effort is.

The value of language-universal gestures, whether visible (stabbing) or audible (explosion sound-effects), is not readily apparent when removed from a primitive environment (because language-users could simply identify a threat in so many words), but it is clearly valuable at a communication-universal level. Bigness and loudness convey threat and authority, and power (often sheer size) is expressed visibly or audibly with such ferocity that even insects employ such pure representations of threat; for example, Ohala (1983: 7) supports the existence of a cross-species “frequency code” which equates a generator’s smallness with high pitch, and its largeness or threat with low pitch and loudness. It’s ultimately a simple matter of physics. (Well, at least for the parts of our shared reality where physics holds sway… and for the purposes of this tutorial we are not concerned with domains where our reality is not “physics all the way down.”)

Given our personal, continual experience with prominence, it seems unreasonable to suggest otherwise than that it was beneficial to adapt an internal resource, namely the cognitive evaluation (or articulation) of prominence, to serve an external purpose: the communication or physical articulation of prominence; for example, it seems reasonable to contend that iconic stress eventually gives rise to communicative and linguistic stress.

When we put this all together (along with research into actual communication data), we gain some insight into the emergence of communication from lower-order entities, both in the development of (a) various species, and (b) those species’ individual members (ancestrally, and throughout their lifespan). We can appreciate the connections between:

• a cobra fanning its hood to appear larger,

• a silverback wreaking sensational havoc in its environment to establish its power,

• S using significant emphasis while saying (or signing the equivalent of), “I am the BOSS around here” (perhaps as accompanied by emphatic gestures), and

• someone who, experiencing a disordered loss for words, “goes absolutely apeshit” to express their authority over a situation.

An understanding of those origins is important because:

• those two patterns of emergence (i.e., a and b, similar to phylogeny and ontogeny, respectively) are reflected in one another (albeit this “recapitulation” is neither as simple nor as strict as Haeckel proposed in his “Biogenetic Law”), and

• disorders of complex, higher-order, emergent systems (such as communication or language) are typically based in a disturbance of their lower-order components, such as some aspect of sensation, perception, or cognition.

As this tutorial progresses, we will be looking at some examples, such as what happens when a sensory disability is associated with challenges in the creation of form-meaning pairs, and when disorders of language are rooted in disturbances of communication (or its components).

This is of particular importance to intensely special education because the origins of some of the associated disorders run deeeeep. (Note how truly deep that must be, because we used so many letter ‘e’s to spell it.)